New research suggests an AI agent can’t fully replace a human consultant — at least for now.

Mercor, the AI training giant, tested how well leading AI models, acting as agents, performed real-world consulting, banking, and legal tasks.

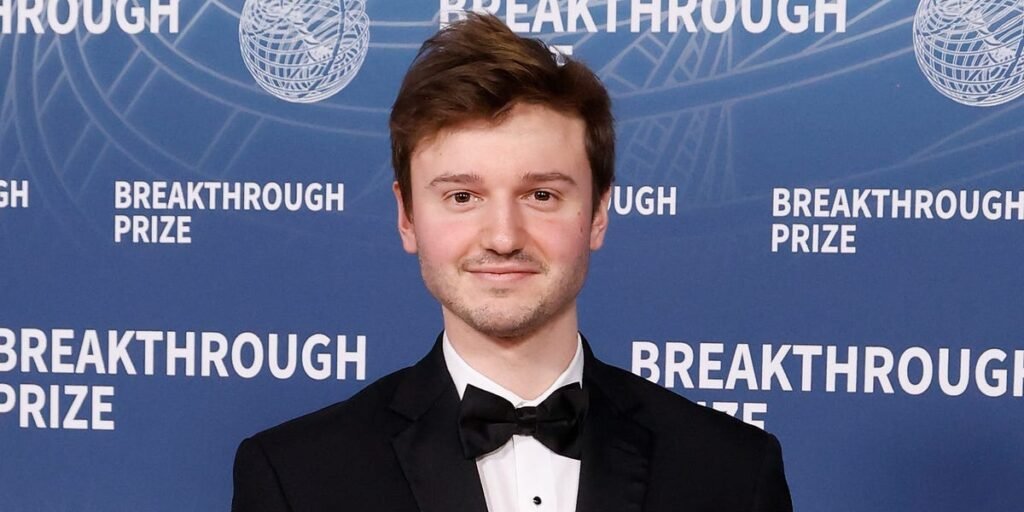

The models failed most of the time, but Mercor’s CEO, Brendan Foody, told Business Insider that the results tell only part of the story.

The consulting tasks in Mercor’s APEX-Agents benchmark were designed to simulate real management consulting work, based on expert surveys and input from consultants at McKinsey, BCG, Deloitte, Accenture, and EY.

Across all task categories, the AI agents completed fewer than 25% of the tasks on the first try. Given eight attempts, they completed only about 40%. On the management consulting tasks, OpenAI's GPT-5.2 initially performed best, completing nearly 23% of the tasks on its first attempt. Anthropic's Opus 4.6, released this week, did even better, at nearly 33%.

Although many of the tasks went uncompleted, Foody said the success rate for GPT-3 was only 3%, compared with 23% for GPT-5.2. Anthropic's model went from 13% to 33% on consulting tasks in a matter of months. Foody said he expects the models' success rate to be closer to 50% by the end of the year.

“These are some of the hardest tasks in the economy that people pay millions of dollars to consulting firms to do, and the models are finally being able to do them with an incredible rate of progress,” Foody said.

AI has already disrupted the consulting industry, changing the way firms hire and make money, and the likelihood of agents displacing consultants grows as the models continue to improve.

McKinsey chief Bob Sternfels recently said the prestigious management consulting firm had a workforce of 60,000, 25,000 of them AI agents.

He also said it's the first time in McKinsey's history that the firm has been able to grow without increasing its head count.

Where AI agents fail in consulting tasks

The frontier models Mercor tested included those from OpenAI, Google, and Anthropic, among others.

One example consulting task instructed the AI agent to “analyze category consumption patterns and market penetration using the Category Penetration Score methodology for PureLife’s portfolio strategy,” asking for several specific outputs in response. The AI agents failed to produce an accurate response.

“No model is ready to replace a professional end-to-end,” the findings concluded.

Mercor found the AI agents were great at research and pretty good at data analysis, Foody said.

Where they consistently got tripped up was on longer-horizon tasks. The biggest indicator that a model would struggle was how long a task would take a human to complete, or how many steps it involved.

Unlike a human, Foody said, the models struggle to work out where in a given file system they should look for the right information, so they often end up in the wrong files. They also struggle with the planning needed to work across multiple tools and cross-reference files at the same time.

For tasks that can be done in an hour or less or that only require the use of a single tool, the models perform relatively well.

Foody compared the agents to interns: they might have a 50% pass rate, and the partner still catches a lot of issues in their work.

Frank Jones, a former KPMG consultant who now works as an expert contractor for Mercor, said that in his experience training AI, the models can get close on certain tasks, but some human refinement is often needed.

He also said the models need very specific prompts because they don’t always understand common expectations or phrases in consulting, like “client-ready.”

“Most consultants, they know what that means. But for AI, I think there’s a lot of nuance in that,” he said.

The AI models are quickly improving

According to Foody, continuing to improve the models doesn’t require a breakthrough — it requires more and better training, which the frontier labs are already investing heavily in.

“That’s why we have so much revenue,” he said, adding, “We’re in the business of replacing human judgment.”

Mercor, whose clients have included OpenAI, Anthropic, and Meta, secured a funding deal in the fall that valued the company at $10 billion. Mercor employs more than 30,000 contractors around the world who help train AI models through tasks like rewriting chatbot responses. Foody previously said the company grew its revenue in 2025 by 4,658%.

Foody said he is confident that consulting jobs, especially lower-level roles, are among those that will be displaced by AI. He said the next version of the benchmark will expand to evaluate the whole value chain of a professional services firm: "Instead of evaling the analyst, we're evaling McKinsey itself."

For now, he said, Mercor's benchmark tells an appealing story for McKinsey, because the firm can point to it as evidence that AI adds value without replacing humans.

“The next version of APEX tells a very scary story for McKinsey,” he said, adding, “In the coming two years, we’re going to have chatbots that are as good as the best consulting firm.”